An Empirical Comparison of On-Screen Keyboards

Thomas Tullis, Ellen Mangan, and Richard Rosenbaum

Fidelity Investments

Boston, MA

Human Factors and Ergonomics Society 51st Annual Meeting, Baltimore, MD, October 1-5, 2007

Abstract

Eight different designs for an on-screen keyboard were compared. Some of the factors manipulated included QWERTY vs. alphabetic layout, key-cap appearance vs. none, and mouseover feedback vs. none. We found that a design featuring two complete QWERTY keyboards (upper and lower case) yielded the best performance, but the designs that looked the most like a standard keyboard were preferred.

Introduction

On-screen keyboards might be used in a variety of situations where it is not possible, practical, or even wise to use a normal keyboard. Perhaps the most widely known situation is a PDA that has no keyboard. Tablet PCs are another example. But even with desktop PCs there are cases where an on-screen keyboard might be more appropriate. One example is a user who has difficulty using a normal keyboard due to a disability or injury. A less widely known situation is one where the security of the PC in use might be compromised and the user needs to log in to a remote system to check email or perform other transactions. This is particularly likely when using a public PC.

So-called "keyboard sniffers" (keyloggers) record the keystrokes typed on a PC for later analysis. These can be small hardware devices installed between the keyboard and the computer, or "spyware" software that records keystrokes in the background. In either case, the user may not know that the keyboard sniffer exists. By analyzing its log of keystrokes, someone might detect repeated patterns that represent a user ID and password used to access a remote system. One way to thwart a keyboard sniffer is to use an on-screen keyboard for entering very sensitive items, such as the password for a remote system. The sensitive information is then entered via mouse clicks at certain screen coordinates rather than via the keyboard, so the keyboard sniffer has nothing to record.
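On the page side, an on-screen keyboard is essentially a hit test that turns click coordinates into characters, so no keystrokes are generated for a keystroke logger to capture. The sketch below illustrates the idea only: the grid layout, key size, screen origin, and the function name `key_at` are hypothetical and do not describe the study's keyboards.

```python
from typing import Optional

# Hypothetical layout: each row is a string of characters drawn as square
# keys KEY_SIZE pixels wide, starting at screen point (ORIGIN_X, ORIGIN_Y).
ROWS = ["1234567890", "qwertyuiop", "asdfghjkl", "zxcvbnm"]
KEY_SIZE = 30
ORIGIN_X, ORIGIN_Y = 100, 200


def key_at(x: int, y: int) -> Optional[str]:
    """Return the character under a mouse click at screen point (x, y),
    or None if the click falls outside every key."""
    if x < ORIGIN_X or y < ORIGIN_Y:
        return None
    row = (y - ORIGIN_Y) // KEY_SIZE
    col = (x - ORIGIN_X) // KEY_SIZE
    if row >= len(ROWS) or col >= len(ROWS[row]):
        return None
    return ROWS[row][col]


# The password arrives as a sequence of click coordinates, not keystrokes.
clicks = [(175, 305), (115, 275), (235, 245)]
print("".join(k for k in (key_at(x, y) for x, y in clicks) if k))  # cat
```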

There have been a few previous studies investigating certain types of on-screen keyboards (e.g., MacKenzie & Zhang, 1999; Sears et al., 2001; Zhai et al., 2001), but none have compared a broad range of on-screen keyboards to each other.

Methodology of the Study

Overview

The goals of the study were to assess both user performance and subjective preference for a variety of on-screen keyboards when entering a password. We conducted the study online, on our company's intranet, to obtain a large number of participants. Participants chose the password they would use for the study from a set that we provided. This reduced the variability in the passwords used and prevented the use of extremely simple (and insecure) passwords. All of the passwords we provided met standard criteria for highly secure passwords, meaning that each contained all of the following types of characters:

- Upper- and lower-case English letter(s)

- Digit(s) (0-9)

- Special character(s), e.g., #$%^&*(

Specifically, all of the passwords we provided contained two randomly selected English words with one upper-case letter each, two digits, and one or two special characters. The following passwords were provided for the participants to choose from:

- Bagel_Ocean38

- 4PlaneBoot5?

- !Pixel7Flute2

- 9Draft#Busy6

- Leafy4Speak>7

- !Heavy*List19
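The character-class criteria and the construction recipe above are mechanical enough to check in code. The sketch below (the function names are our own, for illustration) confirms that each of the six provided passwords contains upper- and lower-case letters, digits, and special characters, and has exactly two upper-case letters, two digits, and one or two special characters. It assumes Python's `string.punctuation` as the definition of "special character" and does not check that the letters form English words.

```python
import string

PROVIDED = ["Bagel_Ocean38", "4PlaneBoot5?", "!Pixel7Flute2",
            "9Draft#Busy6", "Leafy4Speak>7", "!Heavy*List19"]


def meets_criteria(pw: str) -> bool:
    """True if pw has upper- and lower-case letters, a digit,
    and a special (non-alphanumeric) character."""
    return (any(c.isupper() for c in pw)
            and any(c.islower() for c in pw)
            and any(c.isdigit() for c in pw)
            and any(not c.isalnum() for c in pw))


def matches_recipe(pw: str) -> bool:
    """Check the construction described in the text: exactly two
    upper-case letters (one per word), two digits, one or two specials."""
    uppers = sum(c in string.ascii_uppercase for c in pw)
    digits = sum(c in string.digits for c in pw)
    specials = sum(c in string.punctuation for c in pw)
    return uppers == 2 and digits == 2 and specials in (1, 2)


print(all(meets_criteria(p) and matches_recipe(p) for p in PROVIDED))  # True
```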

The On-screen Keyboards

As shown in Figures 1 through 8, we created eight prototype on-screen keyboards that varied in layout, visual appearance, ordering of the characters, and visual feedback. Two of them (Conditions 6 and 7) included a random feature. This was intended to test a possible security advantage: the mouse clicks that enter a given password land in slightly different screen locations on each login.

Figure 1: Condition 1: Alphabetic layout, no mouseover feedback, no key-caps.

Figure 2: Condition 2: Larger QWERTY layout, no mouseover feedback, key-caps.

Figure 3: Condition 3: Same as Condition 1 but with mouseover highlighting of the characters.

Figure 4: Condition 4: Two smaller QWERTY keyboards (upper and lower case), mouseover highlighting, no key-caps.

Figure 5: Condition 5: Same as Condition 2 but with no key-caps.

Figure 6: Condition 6: Same as Condition 2 but with random shuffling of the top row of keys.

Figure 7: Condition 7: Same as Condition 8 but split into two halves that are separated by a random distance.

Figure 8: Condition 8: Smaller QWERTY layout, no mouseover feedback, key-caps.

Figure 9: Control condition used in study.
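The paper does not describe how the random features of Conditions 6 and 7 were implemented. One plausible approach is sketched below: reshuffle the top row on each page load, and draw a random gap between the two keyboard halves. The top-row contents, the 0 to 120 pixel range, and the function names are all hypothetical.

```python
import random

# Hypothetical top row; the paper does not list which keys it held.
TOP_ROW = list("1234567890")


def shuffled_top_row(rng: random.Random) -> list[str]:
    """Condition 6 style: the same keys in a new random order per page load."""
    row = TOP_ROW[:]          # copy so the base layout is never mutated
    rng.shuffle(row)
    return row


def split_gap(rng: random.Random, min_px: int = 0, max_px: int = 120) -> int:
    """Condition 7 style: random horizontal gap (in pixels) between the two
    keyboard halves. The default range is an assumed placeholder."""
    return rng.randint(min_px, max_px)


rng = random.SystemRandom()   # unpredictable layout for a live login page
print(shuffled_top_row(rng), split_gap(rng))
```

Passing a seeded `random.Random` instead makes a session reproducible for testing; a deployed keyboard would want an unpredictable generator such as `random.SystemRandom` so the layout cannot be anticipated.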

Study Procedure

Each participant was randomly assigned to a condition set consisting of the eight on-screen keyboards in a random order, so that any practice or fatigue effects would be distributed across the conditions. This was a within-subjects design: each participant "logged in" four times with each on-screen keyboard.

Their first task was to select the password they would use for the rest of the study from a drop-down list displaying the six "strong security" passwords listed earlier. They then had to type that password. The program checked that the user had typed the password correctly, including letter case. If not, they were given error feedback and could not proceed until they typed it correctly. This ensured that the user understood how to enter the password. On all subsequent pages, their chosen password was displayed below the area where they had to enter it. After this initial check, users received no feedback about the accuracy of their entries (in either the control condition or the on-screen keyboard conditions).

Next, users were taken to the control condition, where they entered their chosen password four times using the normal keyboard. Users then attempted to log in four times with each on-screen keyboard, and after each condition they were taken to a rating page. There they were asked to rate how easy that on-screen keyboard was to use on a 5-point scale.
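The session structure described in this section can be sketched as follows, assuming one fresh random order of the eight keyboards per participant (the paper says only that the conditions were randomly ordered). Rating pages are omitted, and the names `session_plan` and `LOGINS_PER_CONDITION` are illustrative.

```python
import random

CONDITIONS = list(range(1, 9))   # the eight on-screen keyboards
LOGINS_PER_CONDITION = 4


def session_plan(rng: random.Random) -> list[tuple[str, int]]:
    """Build one participant's sequence of (condition, attempt) pairs:
    four Control logins first, then the eight keyboards in random order."""
    attempts = range(1, LOGINS_PER_CONDITION + 1)
    order = rng.sample(CONDITIONS, k=len(CONDITIONS))  # random permutation
    plan = [("Control", a) for a in attempts]
    for cond in order:
        plan += [(f"Condition {cond}", a) for a in attempts]
    return plan


print(session_plan(random.Random())[:6])
```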

Data Collected

For each condition we automatically recorded how long it took for each login attempt, the actual password entered on each attempt, and whether it was correct. After each condition we recorded the user-provided rating of the ease/difficulty of using that on-screen keyboard.

Results

Because data were recorded at several points as participants progressed through the study, the number of participants differs from point to point:

- 672 people started the study.

- 548-581 completed each of the eight on-screen keyboard conditions.

In analyzing the data, we adopted the following rules for excluding some data from the analyses:

- We excluded any trials where the user obviously did not make a valid attempt at entering the password chosen, such as any trials where the user keyed only 1 or 2 characters or keyed random characters.

- We dropped the time data for any trials where the user entered the password in less than 1 second. This occurred only in the Control condition and indicated that the user had copied the password to the clipboard and pasted it in subsequent Control trials.

- We dropped the time data for any trials where the user took 100 seconds or longer. This excluded cases in which the user was probably interrupted or otherwise distracted.
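The exclusion rules above can be expressed as a small screening function. This is a sketch under assumptions: the `Attempt` record and the named thresholds are our own framing, and only the length part of the "not a valid attempt" rule is automated, since judging random keying needs a human. The time rules drop only the time; the trial still counts toward the error analysis.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Attempt:
    condition: str      # e.g. "Control" or "Condition 4"
    entered: str        # the password string actually entered
    seconds: float      # time taken for this login attempt


MIN_CHARS = 3          # attempts of only 1 or 2 characters are excluded
MIN_SECONDS = 1.0      # faster than this suggests copy-and-paste
MAX_SECONDS = 100.0    # this long or longer suggests an interruption


def screen(attempt: Attempt) -> tuple[bool, Optional[float]]:
    """Return (keep_trial, usable_time) under the exclusion rules.

    A trial can be kept for error analysis while its time is dropped.
    """
    if len(attempt.entered) < MIN_CHARS:
        return False, None
    if attempt.seconds < MIN_SECONDS or attempt.seconds >= MAX_SECONDS:
        return True, None
    return True, attempt.seconds
```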

Time Data

Figure 10 shows the mean time per login attempt for each of the on-screen keyboards and for the Control condition.

Figure 10. Mean time per login attempt. Error bars represent the 95% confidence interval.

As expected, users were significantly faster entering the password via the normal keyboard (Control condition) than any of the on-screen keyboards. In fact, they were about twice as fast with the normal keyboard. All of the on-screen keyboards yielded somewhat similar times, with means ranging from 18 to 22 seconds. The two on-screen keyboards with a random feature (Conditions 6 and 7) took slightly longer than any of the others. Conditions 2, 4, and 8 yielded slightly shorter times than some of the other on-screen keyboards.
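Means and error bars like those in Figure 10 could be computed as below. The paper does not say how its confidence intervals were derived; this sketch assumes one mean time per participant per condition and uses a normal approximation (the mean plus or minus 1.96 standard errors), which is close to a t-based interval at samples of several hundred. The hypothetical `speed_ratio` helper expresses the "about twice as fast" comparison.

```python
import math
import statistics


def mean_ci95(values: list[float]) -> tuple[float, float, float]:
    """Return (mean, lower, upper) using a normal-approximation 95% CI."""
    m = statistics.mean(values)
    se = statistics.stdev(values) / math.sqrt(len(values))
    return m, m - 1.96 * se, m + 1.96 * se


def speed_ratio(keyboard_times: list[float], control_times: list[float]) -> float:
    """How many times longer an on-screen keyboard takes than Control;
    a ratio near 2 means the normal keyboard was about twice as fast."""
    return statistics.mean(keyboard_times) / statistics.mean(control_times)
```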

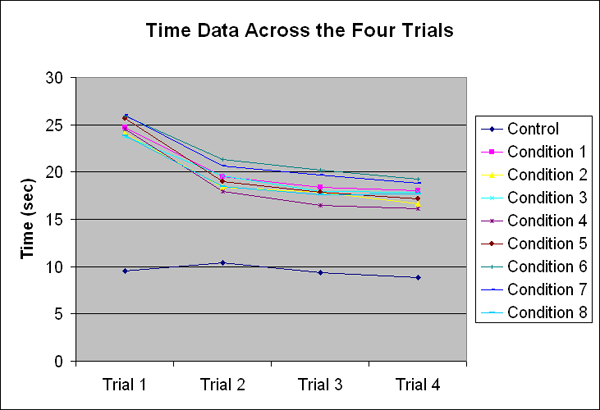

Figure 11 shows the average time for each condition on each of the four login attempts. All of the on-screen keyboard conditions showed a significant practice effect, with the greatest improvement in time coming between the first and second logins. The Control condition showed almost no practice effect.
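A practice effect of this kind shows up when mean times are grouped by condition and trial number. The sketch below assumes each row is a hypothetical `(condition, trial, seconds)` tuple and that every condition has all four trials; `largest_drop` reports which pair of consecutive trials shows the biggest improvement.

```python
from collections import defaultdict
from statistics import mean
from typing import Iterable


def trial_means(rows: Iterable[tuple[str, int, float]]) -> dict[str, list[float]]:
    """Return {condition: [mean time for trial 1, 2, 3, 4]}."""
    buckets: dict[tuple[str, int], list[float]] = defaultdict(list)
    for condition, trial, seconds in rows:
        buckets[(condition, trial)].append(seconds)
    out: dict[str, list[float]] = {}
    for condition, trial in sorted(buckets):   # trials in ascending order
        out.setdefault(condition, []).append(mean(buckets[(condition, trial)]))
    return out


def largest_drop(means: list[float]) -> tuple[int, int]:
    """1-based trial numbers of the consecutive pair with the biggest speed-up."""
    drops = [means[i] - means[i + 1] for i in range(len(means) - 1)]
    i = max(range(len(drops)), key=drops.__getitem__)
    return i + 1, i + 2
```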

Figure 11. Time per login attempt for each trial and each condition.

Error Data

Figure 12 shows the error rate for each of the conditions. These data represent the percentage of login attempts in which users entered a password that did not exactly match their chosen password, including letter-case errors, substitution errors, etc. Case errors appear to have been the most common type (e.g., "Bagel_OCean38" instead of "Bagel_Ocean38").
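The distinction between case errors and other errors can be made by comparing each entry with the chosen password both exactly and case-insensitively. This is a sketch of one way to do it, not the study's scoring code; the three labels and the function names are our own.

```python
def classify(entered: str, chosen: str) -> str:
    """Label one login attempt as 'correct', 'case' (differs only in
    letter case), or 'other' (substitution, omission, insertion, ...)."""
    if entered == chosen:
        return "correct"
    if entered.lower() == chosen.lower():
        return "case"
    return "other"


def error_rate(pairs: list[tuple[str, str]]) -> float:
    """Percentage of (entered, chosen) attempts that are not an exact match."""
    errors = sum(classify(e, c) != "correct" for e, c in pairs)
    return 100.0 * errors / len(pairs)


print(classify("Bagel_OCean38", "Bagel_Ocean38"))  # case
```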

Figure 12. Error rates in password entry for the conditions. Error bars represent the 95% confidence interval for the mean.

Overall, the error rates were relatively high. This could have been due to several factors:

- The participants were not using a password that they were already familiar with.

- The passwords that they could choose from were all highly secure passwords, with upper- and lower-case letters, digits, and special characters. In spite of security guidelines, most people do not use passwords this complex.

- The participants were not given feedback about the accuracy of their entries (apart from the initial, uncounted entry that confirmed the user knew the correct password).

The error rates for all of the on-screen keyboards were significantly higher than for normal entry via the keyboard (the Control condition). But the error rate for one on-screen keyboard, Condition 4, was significantly lower than the error rates for all the other on-screen keyboards.

Subjective Ratings

There were two subjective ratings given to each on-screen keyboard: one immediately after using it and another at the end of the study after they had used all of the keyboards. The mean ratings given immediately after using each keyboard are shown in Figure 13.

Figure 13. Mean ratings of ease of use, on a 5-point scale, given immediately after using each on-screen keyboard. Higher values are better. Error bars represent the 95% confidence interval for the mean.

Conditions 2 and 8, which are the two normal on-screen QWERTY keyboards (larger and smaller), received significantly better ratings than all other on-screen keyboards except Condition 4. Conditions 6 and 7, which are the two with a random feature, received significantly worse ratings than most of the other on-screen keyboards.

Discussion

As often happens in usability studies, the performance data and subjective ratings show quite different patterns. Since the time data for the various on-screen keyboards did not show differences of any practical significance, we have focused more on the error data as the primary performance measure. It is clear from the error data that Condition 4 came out significantly better than all of the other on-screen keyboards. This condition used two complete QWERTY keyboards, one for upper case and one for lower case, stacked vertically. Key-cap appearances were not used, but visual highlighting on mouseover was used. The preference data, on the other hand, show clearly that users preferred the two on-screen keyboards that look the most like a traditional keyboard (Conditions 2 and 8). This is perhaps due to familiarity. Interestingly, though, Condition 4 (which yielded the best performance data) received the next highest ratings (after 2 and 8).

Overall, we believe Condition 4 is the best of the alternatives studied. We also believe that the following general conclusions can be drawn from this study:

- None of the on-screen keyboards studied came close to the level of performance for the Control condition (normal entry via the keyboard). Most users are simply too highly skilled at using a normal keyboard. Those motor skills do not translate well to an on-screen keyboard.

- The random features studied here (Conditions 6 and 7) negatively impacted both performance and subjective preference. Any benefit that such features might offer from a security perspective would have to be weighed against that.

- Taken together, the two on-screen keyboards that included mouseover highlight (3 and 4) came out better than most of the other on-screen keyboards. Many participants even commented that the highlighting helped.

- The on-screen keyboards that required the use of an on-screen Shift key (Conditions 2, 5, 6, 7, and 8) yielded higher error rates, as a group, than those that did not.
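The grouped comparison in the Shift-key bullet amounts to pooling errors and attempts within each group. In this sketch, `counts` is a hypothetical mapping from condition number to (errors, attempts), and Conditions 1, 3, and 4 form the group that did not require an on-screen Shift key; the paper does not state whether its group comparison was pooled this way.

```python
SHIFT_CONDITIONS = {2, 5, 6, 7, 8}   # required an on-screen Shift key
NO_SHIFT_CONDITIONS = {1, 3, 4}      # the remaining conditions


def pooled_error_rate(counts: dict[int, tuple[int, int]], group: set[int]) -> float:
    """counts maps condition -> (errors, attempts); return the pooled
    percentage of erroneous attempts across the conditions in group."""
    errors = sum(counts[c][0] for c in group)
    attempts = sum(counts[c][1] for c in group)
    return 100.0 * errors / attempts
```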

- Although the on-screen keyboard with the lowest error rate was one that used a QWERTY layout, the next-lowest error rate was for one that used an alphabetic layout (Condition 3). Therefore, we do not believe that a QWERTY layout is always better than an alphabetic layout for on-screen keyboards.

References

MacKenzie, I.S., & Zhang, S.X. (1999). The design and evaluation of a high-performance soft keyboard. Proceedings of CHI '99: ACM Conference on Human Factors in Computing Systems, pp. 25-31.

Sears, A., Jacko, J.A., Chu, J., & Moro, F. (2001). The role of visual search in the design of effective soft keyboards. Behaviour and Information Technology, 20(3), pp. 159-166.

Zhai, S., Smith, B.A., & Hunter, M. (2001). Performance optimization of virtual keyboards. Human-Computer Interaction.

Comments? Contact Tom@MeasuringUX.com.